Hi all!

I hope that you are doing well, safe and healthy!

In this journal, I would like to share and explain a case study about one of the great features of Google Kubernetes Engine (GKE). Yup, it is Backup for GKE. As the name suggests, this feature backs up and restores the workloads in the Kubernetes clusters we run on Google Cloud.

What can be backed up?

- Configuration backups: the Kubernetes resource manifests that make up the cluster state.

- Volume backups: application data that corresponds to PersistentVolumeClaim resources.

There are many possible backup and restore scenarios. For example, we can back up only the configuration and restore it to a newly created cluster, or we can back up the entire cluster and restore it to the source cluster as part of disaster recovery. We can also set a schedule so that backups run automatically. That can save our lives when shocking incidents happen unexpectedly.

According to the main documentation (see References), there are two main components to focus on: a service and an agent. The service acts as the controller for Backup for GKE, while the agent runs automatically in each cluster where backups or restores are performed. Below is the architecture diagram:

As you may know, in a previous journal I wrote about Velero, an open-source tool with a similar function to Backup for GKE. So, what is the difference between the two? From my perspective, Backup for GKE is simpler and requires less effort than Velero. You do not need to download and install a binary as you do with Velero. Backup for GKE is also more tightly integrated with GKE and other GCP services such as IAM and Cloud Storage.

However, if you are more familiar with Velero because you have used it in other Kubernetes clusters, you will need to adapt when you switch to Backup for GKE. For example, Backup for GKE requires you to create a backup plan and a restore plan as templates before you can run a backup or a restore. Backup for GKE also poses a challenge once you have grown used to it and later need to move to another cloud platform: because the feature is native and proprietary, you will have to research and adapt again.

Backup for GKE is a separate service from GKE, so it is also priced separately. According to the pricing documentation, we are charged for two items: backup management and backup storage. Prices also differ by region, so be careful!

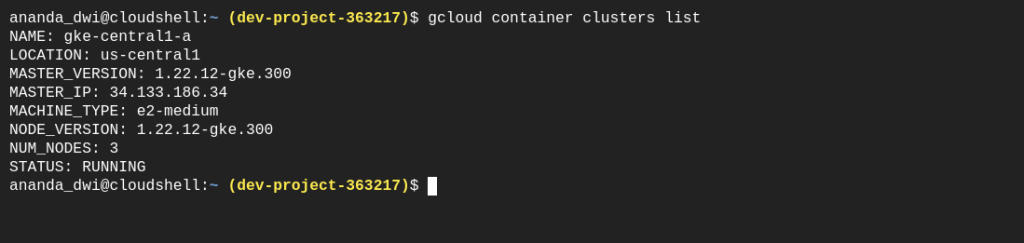

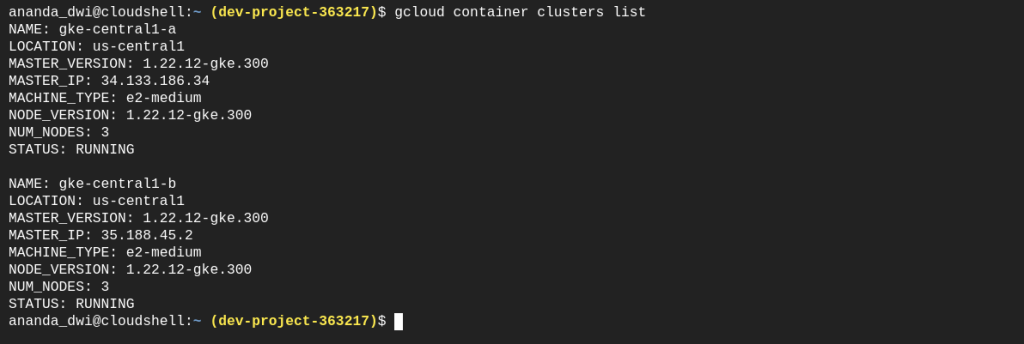

Now, in this journal, we will walk through a simple backup and restore scenario. I have a GKE cluster in us-central1 named gke-cluster1-a. On it, I deploy a WordPress application with a persistent disk provisioned through a PersistentVolumeClaim, and run a backup every hour. Then I create a new GKE cluster named gke-cluster1-b and restore the earlier backup onto it, to verify that the backup works correctly.

What will I back up? The GKE cluster configuration and the WordPress data stored on the persistent disk. So, when the restore is complete, WordPress comes up automatically and can be accessed from outside.

At the time of writing, Backup for GKE still has a limitation for volume (PVC) backups: it only supports multi-zone (intra-region) setups and does not support multi-region yet. So, I will only use the us-central1 region for both backup and restore.

Okay! Let’s jump in!

Here, I will use the Google Cloud SDK (gcloud) instead of the Google Cloud Console, so I will define the environment variables first, like below:
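A minimal sketch of those variables. `PROJECT_ID`, `GCP_REGION`, and `CLUSTER_NAME` are the names the later commands use; the project ID value is a placeholder, and `GCP_ZONE` with the value `us-central1-a` is my own assumption because the original values are not shown.

```shell
# Variables reused by the gcloud commands in this journal.
# PROJECT_ID is a placeholder: replace it with your own project ID.
export PROJECT_ID="your-project-id"
# Region and cluster name from the scenario described above.
export GCP_REGION="us-central1"
# Assumption: any zone inside us-central1 works as the default zone.
export GCP_ZONE="us-central1-a"
export CLUSTER_NAME="gke-cluster1-a"
```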

Make sure the values are correct, and then set the default project, region, and zone from the variables defined above.
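A sketch of that step using the standard `gcloud config set` properties. The `GCP_ZONE` variable is an assumed name; the fallback values only apply when the variables were not exported earlier.

```shell
# Fallback placeholders, used only if the variables were not exported earlier.
: "${PROJECT_ID:=your-project-id}"
: "${GCP_REGION:=us-central1}"
: "${GCP_ZONE:=us-central1-a}"

# Store the defaults in the active gcloud configuration.
gcloud config set project "$PROJECT_ID"
gcloud config set compute/region "$GCP_REGION"
gcloud config set compute/zone "$GCP_ZONE"
```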

We can verify with the command below:

```shell
gcloud config list
```

We need to enable three APIs, one for each service: the Kubernetes Engine (container) API used by GKE for compute, the storage API used for storage, and the Backup for GKE API used for the backup and restore tasks.
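A sketch of enabling the three APIs in one call. The service names below are the ones I understand correspond to Kubernetes Engine, Cloud Storage, and Backup for GKE; double-check them with `gcloud services list --available` if in doubt.

```shell
# Fallback placeholder, used only if PROJECT_ID was not exported earlier.
: "${PROJECT_ID:=your-project-id}"

# Enable the Kubernetes Engine, Cloud Storage, and Backup for GKE APIs.
gcloud services enable \
  container.googleapis.com \
  storage.googleapis.com \
  gkebackup.googleapis.com \
  --project "$PROJECT_ID"
```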

We can verify the enabled services with the command below:

```shell
gcloud services list --enabled
```

Next, we create a GKE cluster. I create it as a public cluster with a multi-zone (regional) configuration in the us-central1 region. Do not forget to add `--addons=BackupRestore` to install the Backup for GKE agent in this cluster.
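A minimal sketch of such a command, assuming the variables defined earlier. `--num-nodes 1` is my assumption for a small test cluster (for a regional cluster this count applies per zone); the exact command I ran may differ from this sketch.

```shell
# Fallback placeholders, used only if the variables were not exported earlier.
: "${PROJECT_ID:=your-project-id}"
: "${GCP_REGION:=us-central1}"
: "${CLUSTER_NAME:=gke-cluster1-a}"

# Regional (multi-zone) public cluster with the Backup for GKE agent installed.
gcloud container clusters create "$CLUSTER_NAME" \
  --project "$PROJECT_ID" \
  --region "$GCP_REGION" \
  --num-nodes 1 \
  --addons=BackupRestore
```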

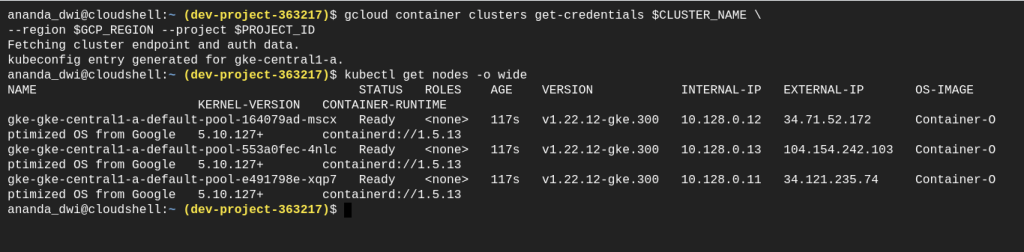

Verify the cluster and make sure all worker nodes are in the Ready state.

```shell
gcloud container clusters get-credentials $CLUSTER_NAME \
  --region $GCP_REGION --project $PROJECT_ID

kubectl get nodes -o wide
```

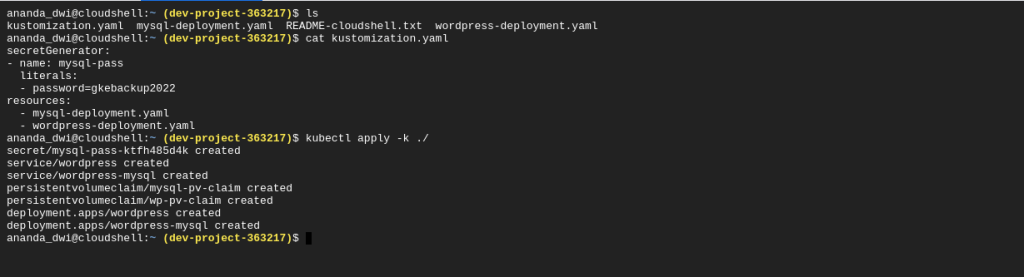

For the WordPress application, I followed an existing WordPress-on-GKE tutorial. You can follow along, or use your own application instead.

Make sure the pods are running, the Service has an external IP, and the PVC status is Bound.

```shell
kubectl get pods
kubectl get svc
kubectl get pvc
```

Then try to access the application from a browser using the Service's external IP.
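If you prefer the terminal, a small sketch can read the external IP and check that WordPress answers. The Service name `wordpress` is an assumption; adjust it to match your manifest.

```shell
# Assumption: the WordPress Service is named "wordpress".
SERVICE_NAME="wordpress"

# Read the load balancer IP assigned to the Service.
EXTERNAL_IP="$(kubectl get svc "$SERVICE_NAME" \
  -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
echo "Open http://${EXTERNAL_IP}/ in your browser"

# Print only the HTTP status code returned by the site.
curl -s -o /dev/null -w '%{http_code}\n' "http://${EXTERNAL_IP}/"
```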

Now, let's set up the backup strategy. We will back up the entire Kubernetes cluster environment, including volume data and Secrets.

Before that, make sure Backup for GKE is available in your location, because not all regions support it. Check with the command below:

```shell
gcloud alpha container backup-restore locations list \
  --project $PROJECT_ID
```

For the backup strategy, we have to create a BackupPlan first. I will create it using the environment variables defined below:

```shell
# Define env variables for GKE Backup
export BACKUP_PLAN="gke-central1-a-backup"
export LOCATION="us-central1"
export CLUSTER="projects/$PROJECT_ID/locations/$GCP_REGION/clusters/$CLUSTER_NAME"
export RETAIN_DAYS="3"
```

Then, create a BackupPlan that backs up all namespaces, including Secrets and volume data. I also set a cron schedule so that a backup runs automatically every hour, and set backup retention to only 3 days.
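A sketch of that command, reusing the variables above. The flag names are as I recall them from the Backup for GKE documentation, and alpha flags can change between gcloud releases, so confirm them with `gcloud alpha container backup-restore backup-plans create --help`. `CRON_SCHEDULE` is a hypothetical helper variable.

```shell
# Fallback placeholders, used only if the variables were not exported earlier.
: "${PROJECT_ID:=your-project-id}"
: "${LOCATION:=us-central1}"
: "${BACKUP_PLAN:=gke-central1-a-backup}"
: "${RETAIN_DAYS:=3}"
: "${CLUSTER:=projects/$PROJECT_ID/locations/us-central1/clusters/gke-cluster1-a}"

# Standard cron syntax: minute 0 of every hour.
CRON_SCHEDULE="0 * * * *"

# Back up all namespaces, including Secrets and PVC data, every hour.
gcloud alpha container backup-restore backup-plans create "$BACKUP_PLAN" \
  --project="$PROJECT_ID" \
  --location="$LOCATION" \
  --cluster="$CLUSTER" \
  --all-namespaces \
  --include-secrets \
  --include-volume-data \
  --cron-schedule="$CRON_SCHEDULE" \
  --backup-retain-days="$RETAIN_DAYS"
```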

Verify the newly created backup plan:

```shell
gcloud alpha container backup-restore backup-plans list \
  --project=$PROJECT_ID \
  --location=$LOCATION
```

We can also verify it from the Cloud Console.

How do we run a backup with the BackupPlan created before? The command below creates a manual backup named manual-backup1 with the appropriate BackupPlan, location, and project.
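A sketch of that manual backup, assuming the variables defined earlier. `--wait-for-completion` is, as I recall, the gcloud flag that blocks until the backup finishes; drop it if your gcloud version rejects it and check progress manually instead.

```shell
# Fallback placeholders, used only if the variables were not exported earlier.
: "${PROJECT_ID:=your-project-id}"
: "${LOCATION:=us-central1}"
: "${BACKUP_PLAN:=gke-central1-a-backup}"
BACKUP_NAME="manual-backup1"

# One-off backup based on the plan; waits until the backup completes.
gcloud alpha container backup-restore backups create "$BACKUP_NAME" \
  --project="$PROJECT_ID" \
  --location="$LOCATION" \
  --backup-plan="$BACKUP_PLAN" \
  --wait-for-completion
```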

Wait and ensure the backup is successful.
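To check a backup's status from the terminal (the manual one, or any created by the hourly schedule), a sketch like this prints only its state field. The expected final value is SUCCEEDED, going by the state names I recall from the Backup for GKE API.

```shell
# Fallback placeholders, used only if the variables were not exported earlier.
: "${PROJECT_ID:=your-project-id}"
: "${LOCATION:=us-central1}"
: "${BACKUP_PLAN:=gke-central1-a-backup}"
BACKUP_NAME="manual-backup1"

# Print just the state of the backup.
gcloud alpha container backup-restore backups describe "$BACKUP_NAME" \
  --project="$PROJECT_ID" \
  --location="$LOCATION" \
  --backup-plan="$BACKUP_PLAN" \
  --format='value(state)'
```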

All right. Now that the backup is done, we need to test a restore to make sure the backup works correctly.

First, I will create a second GKE cluster, gke-cluster1-b, with the same specification as the first cluster.
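A sketch of the second cluster, mirroring the first one. `TARGET_CLUSTER_NAME` is a hypothetical variable name, and `--num-nodes 1` is the same assumption as before. The `BackupRestore` add-on is needed here too, because the agent runs in the cluster where the restore is performed.

```shell
# Fallback placeholders, used only if the variables were not exported earlier.
: "${PROJECT_ID:=your-project-id}"
: "${GCP_REGION:=us-central1}"
# Hypothetical variable for the restore target cluster.
TARGET_CLUSTER_NAME="gke-cluster1-b"

# Same shape as the source cluster, with the Backup for GKE agent installed.
gcloud container clusters create "$TARGET_CLUSTER_NAME" \
  --project "$PROJECT_ID" \
  --region "$GCP_REGION" \
  --num-nodes 1 \
  --addons=BackupRestore
```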

As with the BackupPlan, I define the environment variables for the restore:
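A sketch of those variables. All names here (`RESTORE_PLAN`, `RESTORE_NAME`, `TARGET_CLUSTER`, `BACKUP_PLAN_ID`, `BACKUP_ID`) and their values are hypothetical. The full resource paths follow the `projects/…/locations/…/backupPlans/…/backups/…` format used by Backup for GKE.

```shell
# Fallback placeholders, used only if the variables were not exported earlier.
: "${PROJECT_ID:=your-project-id}"
: "${LOCATION:=us-central1}"
: "${BACKUP_PLAN:=gke-central1-a-backup}"

# Hypothetical names for the restore side of the test.
export RESTORE_PLAN="gke-central1-b-restore"
export RESTORE_NAME="manual-restore1"
# Full resource names of the target cluster, the backup plan, and the backup.
export TARGET_CLUSTER="projects/$PROJECT_ID/locations/$LOCATION/clusters/gke-cluster1-b"
export BACKUP_PLAN_ID="projects/$PROJECT_ID/locations/$LOCATION/backupPlans/$BACKUP_PLAN"
export BACKUP_ID="$BACKUP_PLAN_ID/backups/manual-backup1"
```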

Then, create a restore plan and run a manual restore with that restore plan and the backup created before.
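A sketch of both steps, assuming the restore variables above. The flags and their values (`delete-and-restore`, `restore-volume-data-from-backup`) are as I recall them from the Backup for GKE documentation. Verify them with `--help` on your gcloud version before relying on this.

```shell
# Fallback placeholders, used only if the variables were not exported earlier.
: "${PROJECT_ID:=your-project-id}"
: "${LOCATION:=us-central1}"
: "${RESTORE_PLAN:=gke-central1-b-restore}"
: "${RESTORE_NAME:=manual-restore1}"
: "${BACKUP_PLAN_ID:=projects/$PROJECT_ID/locations/$LOCATION/backupPlans/gke-central1-a-backup}"
: "${TARGET_CLUSTER:=projects/$PROJECT_ID/locations/$LOCATION/clusters/gke-cluster1-b}"
: "${BACKUP_ID:=$BACKUP_PLAN_ID/backups/manual-backup1}"

# 1) Restore plan: all namespaces, with PVC data recreated from the backup.
gcloud alpha container backup-restore restore-plans create "$RESTORE_PLAN" \
  --project="$PROJECT_ID" \
  --location="$LOCATION" \
  --backup-plan="$BACKUP_PLAN_ID" \
  --cluster="$TARGET_CLUSTER" \
  --all-namespaces \
  --namespaced-resource-restore-mode=delete-and-restore \
  --volume-data-restore-policy=restore-volume-data-from-backup

# 2) Manual restore of the chosen backup through that plan; wait for it to finish.
gcloud alpha container backup-restore restores create "$RESTORE_NAME" \
  --project="$PROJECT_ID" \
  --location="$LOCATION" \
  --restore-plan="$RESTORE_PLAN" \
  --backup="$BACKUP_ID" \
  --wait-for-completion
```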

Wait until the restore succeeds. Then verify that the pods are running, the PVCs are Bound, and the external IP is accessible.
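A sketch of those checks against the second cluster. `TARGET_CLUSTER_NAME` is the same hypothetical variable as before. The restored Service will most likely receive a new external IP, different from the one on gke-cluster1-a.

```shell
# Fallback placeholders, used only if the variables were not exported earlier.
: "${PROJECT_ID:=your-project-id}"
: "${GCP_REGION:=us-central1}"
TARGET_CLUSTER_NAME="gke-cluster1-b"

# Point kubectl at the restored cluster.
gcloud container clusters get-credentials "$TARGET_CLUSTER_NAME" \
  --region "$GCP_REGION" --project "$PROJECT_ID"

# Same checks as on the source cluster.
kubectl get pods
kubectl get pvc
kubectl get svc
```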

References:

- https://cloud.google.com/kubernetes-engine/docs/add-on/backup-for-gke/concepts/backup-for-gke

- https://cloud.google.com/kubernetes-engine/docs/add-on/backup-for-gke/how-to/install

Cheers!